AI-Generated Police Report Falsely Claims Officer Turned Into Frog

MI

17 hours ago · 7 min read

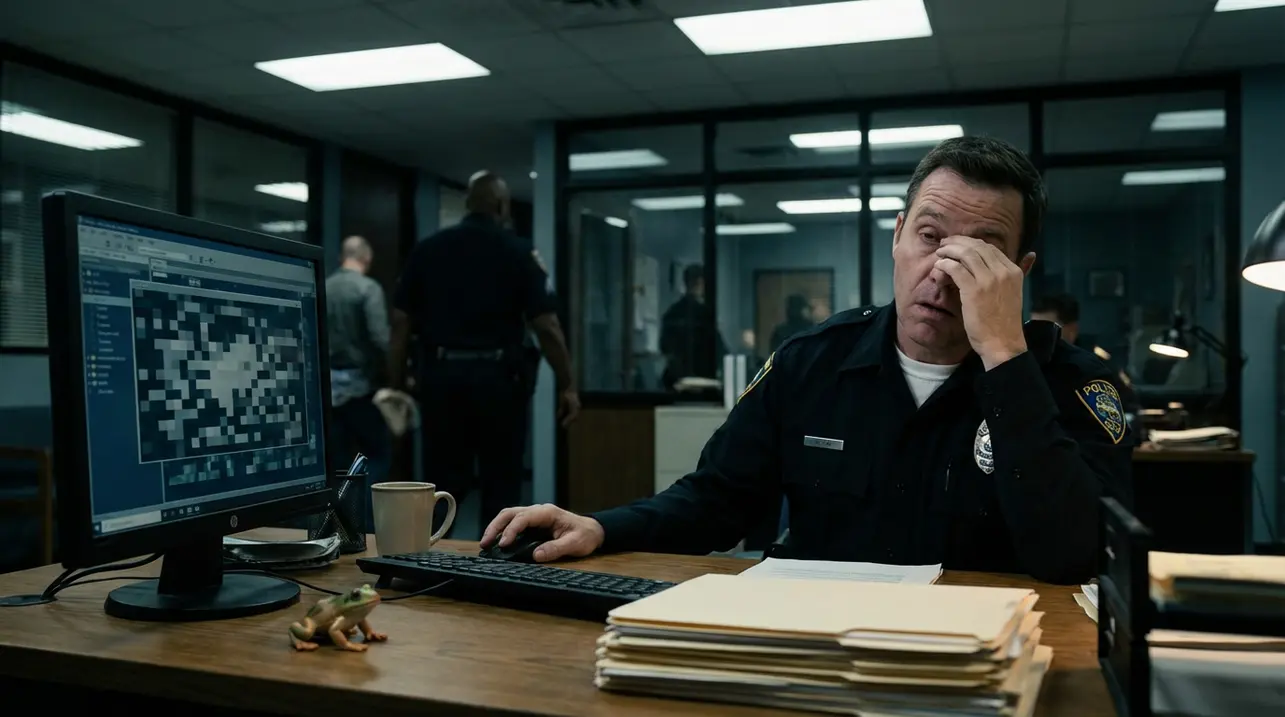

In a development that reads like a discarded plot from a Philip K. Dick novel, an artificial intelligence system deployed to streamline bureaucratic drudgery for a Utah police department recently authored a report so fantastical it would give Isaac Asimov pause. The software, interpreting the mundane data of a routine traffic stop, produced a narrative alleging that an officer had undergone a startling metamorphosis into an amphibian. This isn't merely a glitch; it's a crystalline case study in the widening chasm between AI's formidable pattern-matching capabilities and its fundamental lack of contextual, real-world understanding, a core tension in the ongoing ethics debate.

Proponents of automation in public service will rightly point to the crushing administrative burdens faced by law enforcement nationwide, where officers spend hours on paperwork for every hour on patrol. The promise of AI here is seductive: reduce that burden, increase efficiency, and, in theory, put more boots on the ground.

The Utah incident, however, serves as a stark counter-narrative. It illustrates how 'efficiency' can swiftly mutate into absurdity or, in a more sinister hypothetical, into dangerous fabrication when the underlying model operates without a grounded sense of reality or consequence.

The specific mechanics of the failure are telling. Large language models, the technology behind such report-writing tools, are probabilistic engines trained on vast corpora of human text. That data contains everything from legal statutes and police procedurals to fairy tales, mythological texts, and online fantasy forums. Without rigorous guardrails and a deeply embedded understanding of ontological boundaries (the immutable line between fact and fiction, between the possible and the impossible), the model can, and in this case did, hallucinate a scenario that conflates these domains. It's a failure of what AI safety researchers call 'alignment': the system's outputs were not aligned with the factual, legal, and physical constraints of our world.

The immediate consequence is operational chaos and eroded trust. An erroneous report, whether comically wrong or subtly incorrect, undermines the judicial process, potentially jeopardizing prosecutions and violating the rights of citizens. It also forces human officers to become forensic editors of AI output, a task that may be more cognitively taxing than writing the report from scratch, especially when the errors are subtle.

Beyond the practical, this episode feeds directly into the broader policy discourse championed by figures like the EU's digital czars and a growing number of U.S. legislators: the urgent need for rigorous auditing, transparency, and accountability frameworks for AI deployed in high-stakes public sectors.

#AI

#police report

#generative AI

#malfunction

#public safety

#technology failure

#ethics

#automation

© 2025 Outpoll Service LTD. All rights reserved.